🔴 TTS

⚪ Scenario

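🔔 Enter the text you want to test with TTS and send it to the server.

🔔 The server splits the synthesized speech for that text into chunks and delivers the raw audio data over a WebSocket connection.

🔔 The audio data is stored in a buffer and then played back.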
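Step 3 hinges on one conversion: the raw chunks are 16-bit signed integers, while an AudioBuffer stores 32-bit floats in [-1, 1]. A minimal sketch, assuming mono 16-bit PCM at 22050 Hz (the values the Player code hardcodes) in little-endian byte order, which is what `Int16Array` reads on practically all browser hardware. `pcmChunkToFloat32`, `chunkDurationSeconds` and `toAudioBuffer` are illustrative names, not part of the original project:

```javascript
// Assumption: mono, 16-bit signed PCM at 22050 Hz, as hardcoded in the Player.
const TTS_SAMPLE_RATE = 22050;

// Convert one raw PCM chunk (ArrayBuffer) into floats in [-1, 1).
// Int16Array reads the bytes in the platform's byte order (little-endian in practice).
function pcmChunkToFloat32(arrayBuffer) {
  const samples = new Int16Array(arrayBuffer);
  const floats = new Float32Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    floats[i] = samples[i] / 32768; // -32768 -> -1, 32767 -> just under 1
  }
  return floats;
}

// Playback length of a chunk, in seconds.
function chunkDurationSeconds(sampleCount, sampleRate = TTS_SAMPLE_RATE) {
  return sampleCount / sampleRate;
}

// In the browser: copy the floats into a one-channel AudioBuffer.
function toAudioBuffer(audioContext, floats, sampleRate = TTS_SAMPLE_RATE) {
  const buffer = audioContext.createBuffer(1, floats.length, sampleRate);
  buffer.copyToChannel(floats, 0); // channel 0 = the only (mono) channel
  return buffer;
}
```

Dividing by 32768 keeps every sample inside the range an AudioBuffer expects without any clipping.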

⚪ Code

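// Imports. The module paths for the Redux actions and the toast helper are assumptions.
import { useEffect, useState } from "react";
import { useDispatch, useSelector } from "react-redux";
import { setInputText, setIsPlaying } from "./ttsSlice"; // hypothetical path
import { toastError } from "./toast"; // hypothetical path

const TtsScreen = () => {
  const inputText = useSelector(state => state.tts.inputText);
  const isPlaying = useSelector(state => state.tts.isPlaying);
  const dispatch = useDispatch();

  // One Player instance for the lifetime of the component
  const [player] = useState(() => new Player());

  // onChange receives an event, not the text itself
  const handleText = (event) => {
    dispatch(setInputText(event.target.value));
  };

  const handleBtn = async () => {
    if (isPlaying) return;
    if (!inputText) {
      toastError("Please enter a sentence first.");
      return;
    }
    if (!player.audioContext) return; // Web Audio API unavailable (Player.init failed)

    dispatch(setIsPlaying(true));
    // The AudioContext may still be suspended until a user gesture, so resume it on click
    await player.audioContext.resume();
    // scenario.url (the server port) is defined outside this excerpt
    player.connect(inputText, scenario.url, () => {
      dispatch(setIsPlaying(false));
    });
  };

  useEffect(() => {
    player.init();
  }, []);

  return (
    <>
      <textarea value={inputText} onChange={handleText} />
      <button onClick={handleBtn}>Play</button>
    </>
  );
};

const Player = function() {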

this.audioContext = null;

this.buffers = [];

this.source = null;

this.playing = false;

this.init = function() {

const audioContextClass = (window.AudioContext ||

window.webkitAudioContext ||

window.mozAudioContext ||

window.oAudioContext ||

window.msAudioContext);

if (audioContextClass) {

return this.audioContext = new audioContextClass();

} else {

return toastError("오디오 권한 설정을 확인해주세요.");

}

}

this.addBuffer = function(buffer) {

this.buffers.push(buffer);

}

this.connect = function(inputText, url, onComplete = f=>f) {

const self = this;

const path = `ws://112.220.79.221:${url}/ws`;

let socket = new WsService({

path : path,

onOpen : function(event) {

console.log(`[OPEN] ws://112.220.79.221:${url}/ws`);

socket.ws.send(inputText);

console.log(`[SEND] ${inputText}`)

},

onMessage : function(event) {

console.log("[MESSAGE]");

console.log(event.data);

if (event.data.byteLength <= 55) return;

self.addBuffer(new Int16Array(event.data));

self.play();

},

onClose : function(event) {

console.log("[CLOSE]");

socket.ws = null;

onComplete();

}

});

}

const wait = function() {

this.playing = false;

this.play(); // 다음 chunk 오디오 데이터 재생

}

this.play = function() {

if (this.buffers.length > 0) {

if (this.playing) return;

this.playing = true;

let pcmData = this.buffers.shift();

const channels = 1;

const frameCount = pcmData.length;

const myAudioBuffer = this.audioContext.createBuffer(channels, frameCount, 22050);

// 화이트 노이즈로 버퍼를 채운다.

for (let i = 0; i < channels; i++) {

const nowBuffering = myAudioBuffer.getChannelData(i, 16, 22050);

for (let j = 0; j < frameCount; j++)

nowBuffering[j] = ((pcmData[j] + 32768) % 65536 - 32768) / 32768.0;

}

pcmData = null;

this.source = this.audioContext.createBufferSource();

this.source.buffer = myAudioBuffer;

this.source.connect(this.audioContext.destination);

this.source.start();

this.source.addEventListener("ended", wait.bind(this)); // 오디오 종료 시 이벤트

}

}

}🔴 Web Audio API

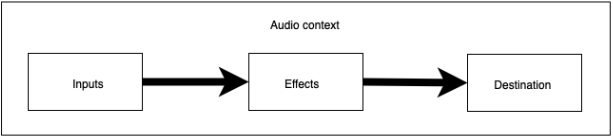

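🔊 An AudioContext represents an audio-processing graph built from multiple AudioNodes.

🔊 In a typical workflow the nodes are chained in order: inputs (sources), effects, and the destination (output).

🔊 You create the nodes you need and connect them to one another.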
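As a concrete instance of inputs → effects → destination, here is a sketch that routes an AudioBufferSourceNode through a GainNode (the effect stage) before the output. The gain stage and the `playThroughGain` helper are illustrative additions; the TTS Player connects its source straight to the destination.

```javascript
// Input (AudioBufferSourceNode) -> effect (GainNode) -> output (destination).
function playThroughGain(audioContext, audioBuffer, volume = 0.8) {
  const source = audioContext.createBufferSource(); // input node
  source.buffer = audioBuffer;

  const gain = audioContext.createGain(); // effect node: scales the volume
  gain.gain.value = volume;

  source.connect(gain);                   // input  -> effect
  gain.connect(audioContext.destination); // effect -> output
  source.start();
  return { source, gain };
}
```

Adding another effect (a filter, a panner) means inserting one more node and one more `connect()` call between the source and the destination.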

Creating an AudioContext

🔥 Everything in the Web Audio API starts with creating an AudioContext object.
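In practice this step interacts with browser autoplay policies: an AudioContext created without a user gesture usually starts in the `"suspended"` state, which is presumably why the TTS button handler calls `resume()` before playing. A sketch of creating the context once and resuming it from a click handler; `getAudioContext` and `ensureRunning` are hypothetical helpers, and the window object is a parameter only so the logic can be exercised outside a browser:

```javascript
let sharedContext = null; // created once, reused afterwards

function getAudioContext(win = globalThis) {
  if (sharedContext) return sharedContext;
  const AudioContextClass = win.AudioContext || win.webkitAudioContext;
  if (!AudioContextClass) throw new Error("Web Audio API is not supported");
  sharedContext = new AudioContextClass();
  return sharedContext;
}

// Call this from a click/touch handler so the browser allows audio to start.
async function ensureRunning(ctx) {
  if (ctx.state === "suspended") {
    await ctx.resume();
  }
  return ctx.state;
}
```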

// Create an AudioContext, falling back to the prefixed constructor in older WebKit/Blink browsers
const audioContext = new (window.AudioContext || window.webkitAudioContext)();

Creating an AudioBuffer

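// Create the AudioBuffer that holds the audio to be played
const audioBuffer = audioContext.createBuffer(numOfChannels, length, sampleRate);
// length is the number of sample frames, i.e. duration in seconds × sampleRate
// (for example, 2 seconds at 22050 Hz → 44100 frames).

Creating an AudioBufferSourceNode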

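🔥 An AudioBufferSourceNode is an input node that uses an AudioBuffer as its sound source. AudioBuffers are meant for short clips, typically under 45 seconds, and a source node can be started only once; after it finishes playing and nothing references it, it is garbage-collected.

🔥 For audio longer than about 45 seconds, MediaElementAudioSourceNode is recommended instead.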
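Because a source node plays only once, streaming TTS audio means creating a new AudioBufferSourceNode per chunk. The Player waits for each chunk's `ended` event before starting the next one, which can leave small audible gaps. An alternative technique (not the author's) is to schedule every chunk on the AudioContext clock with `start(when)` so the chunks play back to back. A sketch, assuming mono Float32 chunks at 22050 Hz; `createChunkScheduler` is a hypothetical helper:

```javascript
// Schedules successive mono Float32 chunks back to back on the AudioContext clock.
// Each chunk gets its own one-shot AudioBufferSourceNode.
function createChunkScheduler(audioContext, sampleRate = 22050) {
  let nextStartTime = 0; // context time (seconds) at which the next chunk should begin

  return function scheduleChunk(floats) {
    const buffer = audioContext.createBuffer(1, floats.length, sampleRate);
    buffer.copyToChannel(floats, 0);

    const source = audioContext.createBufferSource(); // new node per chunk
    source.buffer = buffer;
    source.connect(audioContext.destination);

    // If playback has fallen behind (e.g. a late chunk), start right now instead.
    const startAt = Math.max(audioContext.currentTime, nextStartTime);
    source.start(startAt);
    nextStartTime = startAt + floats.length / sampleRate; // end of this chunk
    return startAt;
  };
}
```

Each call returns the context time at which that chunk will start, so consecutive chunks line up sample-exactly as long as they arrive before the previous one finishes.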

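// Create the AudioBufferSourceNode with the factory method.
// The constructor form (new AudioBufferSourceNode(...)) may not be supported in some browsers.
const audioBufferSourceNode = audioContext.createBufferSource();
// Use the AudioBuffer as the node's sound source
audioBufferSourceNode.buffer = audioBuffer;

Connecting the audio graph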

audioBufferSourceNode.connect(audioContext.destination);

Playing the sound

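audioBufferSourceNode.start();

// WsService: a thin WebSocket wrapper shared by the TTS Player and the STT Recorder
const WsService = function({ path, onOpen = f => f, onMessage = f => f, onClose = f => f, onError = f => f }) {
  this.ws = new WebSocket(path);
  // Initial STT command; Recorder.init sends it to read the server's repeat/drop settings
  this.initMsg = '{"language":"ko","intermediates":true,"cmd":"getsr"}';
  // Deliver binary frames as ArrayBuffer (text frames still arrive as strings)
  this.ws.binaryType = "arraybuffer";
  this.ws.onopen = function(event) { onOpen(event); };
  this.ws.onmessage = function(event) { onMessage(event); };
  this.ws.onclose = function(event) { onClose(event); };
  this.ws.onerror = function(event) { onError(event); };
};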
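Because `binaryType` is `"arraybuffer"`, binary frames reach `onMessage` as ArrayBuffers and text frames as strings. `new Int16Array(buffer)` throws a RangeError when the buffer's byte length is odd, so if the server ever split a frame in the middle of a sample (the post does not say whether it can), the Player's `onMessage` would fail. A defensive sketch that carries the dangling byte over to the next frame; `createPcmReassembler` is a hypothetical helper and assumes little-endian samples read on a little-endian platform:

```javascript
// Reassembles 16-bit PCM samples from binary frames whose byte length may be odd.
function createPcmReassembler() {
  let leftover = null; // one byte carried over from the previous frame

  return function toSamples(data) {
    if (typeof data === "string") return null; // text frame, not audio
    let bytes = new Uint8Array(data);
    if (leftover !== null) {
      // Prepend the carried-over byte to complete the split sample
      const merged = new Uint8Array(bytes.length + 1);
      merged[0] = leftover;
      merged.set(bytes, 1);
      bytes = merged;
      leftover = null;
    }
    if (bytes.length % 2 === 1) {
      // Keep the trailing half-sample for the next frame
      leftover = bytes[bytes.length - 1];
      bytes = bytes.subarray(0, bytes.length - 1);
    }
    // slice() copies into a fresh buffer of even length, safe to view as Int16
    return new Int16Array(bytes.slice().buffer);
  };
}
```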

🟠 STT

⚪ Scenario

🔔 To record audio for the STT test, allow the browser to use the microphone.

🔔 Press the record button and start speaking; speech recognition runs continuously.

🔔 To stop the STT test, click the button again.
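The continuous recognition in step 2 is driven by a small JSON protocol that can be read off the Recorder code: the client sends `cmd: "join"` to start and `cmd: "quit"` to stop, and the server answers with messages carrying an `event` (`"reply"` or `"close"`) and a `payload` holding either `text` or an `stt` array. A simplified sketch of building and classifying those messages; the helper names and the returned `type` labels are illustrative, and the real server may send fields not handled here:

```javascript
// Client -> server: the command strings the Recorder sends ("join" to start, "quit" to stop).
function makeCommand(cmd, language = "ko") {
  // Key order matches the Recorder's hand-written strings: language, intermediates, cmd
  return JSON.stringify({ language, intermediates: true, cmd });
}

// Server -> client: classify one JSON text frame (simplified).
function parseSttMessage(raw) {
  const message = JSON.parse(raw);
  const payload = message.payload || {};
  if (message.event === "reply") return { type: "started" };
  if (message.event === "close") return { type: "closed", status: payload.status };
  if (payload.text) return { type: "result", text: payload.text };
  if (Array.isArray(payload.stt) && payload.stt.length > 0) {
    return { type: "result", text: payload.stt[0].text };
  }
  return { type: "ignored" };
}
```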

⚪ Code

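// Imports. The module paths for the Redux actions, toast helper and Recorder are assumptions.
import { useCallback, useEffect, useState } from "react";
import { useDispatch, useSelector } from "react-redux";
import { setInputText, setIsPlaying } from "./sttSlice"; // hypothetical path
import { toastError } from "./toast"; // hypothetical path
import Recorder from "./Recorder"; // hypothetical path

const SttScreen = () => {
  const inputText = useSelector(state => state.stt.inputText);
  const isPlaying = useSelector(state => state.stt.isPlaying);
  const dispatch = useDispatch();

  // One Recorder instance for the lifetime of the component
  const [recorder] = useState(() => new Recorder());

  // onChange receives an event, not the text itself
  const handleText = (event) => {
    dispatch(setInputText(event.target.value));
  };

  // Toggle: stop if recording, otherwise clear the text and start recording
  const handleBtn = useCallback(() => {
    if (isPlaying) {
      recorder.stop();
    } else {
      dispatch(setInputText(""));
      recorder.start();
    }
    dispatch(setIsPlaying(!isPlaying));
  }, [isPlaying, recorder, dispatch]);

  useEffect(() => {
    recorder.init({
      // scenario.url (the server port) is defined outside this excerpt
      path: scenario.url,
      // Recording started
      onStart: function(event) {
        console.log("Recording started: turn the mic on");
        // setSequence is defined outside this excerpt (it appears to drive an animation)
        setSequence({ segments: [0, 60], forceFlag: false });
        dispatch(setIsPlaying(true));
      },
      // Recognition result received
      onResult: function(text, isRepeat) {
        console.log("Result received: add it to the screen");
        dispatch(setInputText(text));
      },
      // Server signalled the end of recognition
      onClose: function(event) {
        console.log("Results finished: turn the mic off");
        setSequence({ segments: [0, 1], forceFlag: false });
        dispatch(setIsPlaying(false));
      },
      // Connection error
      onError: function(event) {
        toastError("Failed to connect to the server.");
      }
    });
  }, []);

  return (
    <>
      <textarea value={inputText} onChange={handleText} />
      <button onClick={handleBtn}>{isPlaying ? "Stop" : "Record"}</button>
    </>
  );
};

export default SttScreen;

const Recorder = function() {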

this.serverUrl = null;

this.audioContext = (window.AudioContext || window.webkitAudioContext || window.mozAudioContext || window.oAudioContext || window.msAudioContext);

this.context = null; // new audioContext

this.audioInput = null;

this.recorder = null;

this.recording = false;

this.stream = null;

this.wsServiceConfig = {

repeat : "repeat",

drop : "nodrop",

};

this.callback = {

onStart : f=>f,

onResult : f=>f,

onClose : f=>f,

onError : f=>f,

}

this.webSocket = null;

navigator.getUserMedia = navigator.getUserMedia || navigator.webkitGetUserMedia || navigator.mozGetUserMedia;

const self = this;

this.init = function({path, onStart, onResult, onClose, onError}) {

// 서버 초기 연결 : repeat / drop 설정

self.serverUrl = `wss://ai-mediazen.com:${path}/ws`;

const wsService = new WsService({

path : self.serverUrl,

onOpen : function(event) {

if (wsService.ws.readyState === 1) {

wsService.ws.send(wsService.initMsg);

}

},

onMessage : function(event) {

const _arr = event.data.split(",");

self.wsServiceConfig.repeat = (_arr[1] === "0")? "norepeat" : "repeat";

self.wsServiceConfig.filedrop = (_arr[2] === "0")? "nodrop" : "drop";

wsService.ws.close();

},

});

// 콜백 저장

self.callback.onStart = onStart;

self.callback.onResult = onResult;

self.callback.onClose = onClose;

self.callback.onError = onError;

}

this.setup = async function() {

try {

this.stream = await navigator.mediaDevices.getUserMedia({ audio : {optional: [{echoCancellation:false}]}, video : false });

} catch (err) {

return console.log("getStream 오류");

}

this.context = new this.audioContext({

sampleRate : 16000,

});

this.audioInput = this.context.createMediaStreamSource(this.stream);

const bufferSize = 4096;

this.recorder = this.audioInput.context.createScriptProcessor(bufferSize, 1, 1); // mono channel

this.recorder.onaudioprocess = function(event) {

// 녹음 데이터 처리

if (!self.recording) return;

self.sendChannel(event.inputBuffer.getChannelData(0));

}

this.recorder.connect(this.context.destination);

this.audioInput.connect(this.recorder);

}

this.sendChannel = function(channel) {

console.log("[Recorder] process channel");

const dataview = this.encodeRAW(channel);

const blob = new Blob([dataview], { type : "audio/x-raw" });

// send : 최초 1회 연결

if(!self.webSocket) {

self.webSocket = new WsService({

path : self.serverUrl,

onOpen : function(event) {

console.log("open");

self.webSocket.ws.send("{\"language\":\"ko\",\"intermediates\":true,\"cmd\":\"join\"}");

},

onMessage : function(event) {

console.log(event.data);

const receiveMessage = JSON.parse(event.data);

const payload = JSON.stringify(receiveMessage.payload);

const textMessage = JSON.parse(payload);

if (receiveMessage.event === "reply") {

console.log("시작");

self.recording = true;

}

if (receiveMessage.event === "close") {

console.log("closed");

if(textMessage.status){

self.callback.onResult(event, "norepeat");

}

self.webSocket.ws.close();

self.webSocket = null;

self.stop();

self.callback.onClose(event);

}

// 결과물

else if (textMessage.text) {

self.callback.onResult(textMessage.text, self.wsServiceConfig.repeat);

}

else if (textMessage.stt) {

self.callback.onResult(textMessage.stt[0].text, self.wsServiceConfig.repeat);

}

}

});

}

if(self.webSocket.ws.readyState === 1) {

if(blob.size > 0) self.webSocket.ws.send(blob);

}

}

this.encodeRAW = function(channel) {

const buffer = new ArrayBuffer(channel.length * 2);

const view = new DataView(buffer);

let offset = 0;

for (let i = 0; i < channel.length; i++, offset+=2){

const s = Math.max(-1, Math.min(1, channel[i]));

view.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7FFF, true);

}

return view;

}

this.start = function() {

console.log("[Recorder] start");

if(!self.recorder) {

this.setup();

}

self.recording = true;

}

this.stop = function() {

console.log("[Recorder] stop");

this.close();

self.recording = false;

}

this.close = function() {

if(this.webSocket && this.webSocket.ws.readyState === 1) {

console.log("closed");

this.webSocket.ws.send("{\"language\":\"ko\",\"intermediates\":true,\"cmd\":\"quit\"}");

}

}

}

export default Recorder;🔴 Web Audio API 오디오 녹음

Creating an AudioContext

const AudioContextClass = window.AudioContext || window.webkitAudioContext;

const context = new AudioContextClass({ sampleRate: 16000 });

🔊 The AudioContext is created with the sampleRate option set to 16000, so the graph processes audio at 16,000 samples per second, the rate at which audio is streamed to the STT server.
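Older browser versions may not honor the `sampleRate` option. One fallback (a swapped-in technique, not the author's) is to capture at the device's native rate, often 44100 or 48000 Hz, and downsample to 16000 Hz in JavaScript before encoding. A minimal sketch that averages the input samples falling into each output sample; averaging is only a crude low-pass filter, adequate for a speech sketch but not a substitute for a proper resampler. `downsample` is a hypothetical helper:

```javascript
// Downsample mono Float32 audio from inputRate to outputRate (e.g. 48000 -> 16000)
// by averaging the input samples that fall into each output sample's window.
function downsample(input, inputRate, outputRate) {
  if (outputRate === inputRate) return input;
  if (outputRate > inputRate) throw new Error("upsampling is not supported");
  const ratio = inputRate / outputRate;
  const outLength = Math.floor(input.length / ratio);
  const output = new Float32Array(outLength);
  for (let i = 0; i < outLength; i++) {
    const start = Math.floor(i * ratio);
    const end = Math.min(input.length, Math.floor((i + 1) * ratio)); // always > start
    let sum = 0;
    for (let j = start; j < end; j++) sum += input[j];
    output[i] = sum / (end - start);
  }
  return output;
}
```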

Creating a MediaStream

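const stream = await navigator.mediaDevices.getUserMedia({
  audio: { echoCancellation: false },
  video: false,
});

🔊 getUserMedia() asks the user for permission to use a media input device. If the user accepts, it resolves to a MediaStream containing tracks of the requested media types; if the user refuses, the promise rejects with a NotAllowedError.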
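A slightly more defensive version of this step, as a sketch: it uses the standard constraint form (`echoCancellation` directly inside `audio`), fails clearly when `navigator.mediaDevices` is missing (browsers expose it only in secure contexts such as HTTPS or localhost), and returns a function that stops the tracks so the microphone is released. `openMicrophone` is a hypothetical helper; the devices object is a parameter only so it can be tested with a stand-in:

```javascript
// Request the microphone with echo cancellation disabled; return the stream
// plus a release() function that turns the microphone off again.
async function openMicrophone(mediaDevices = globalThis.navigator?.mediaDevices) {
  if (!mediaDevices || !mediaDevices.getUserMedia) {
    throw new Error("getUserMedia is not available (a secure context is required)");
  }
  // Rejects (e.g. NotAllowedError) if the user denies permission
  const stream = await mediaDevices.getUserMedia({
    audio: { echoCancellation: false },
    video: false,
  });
  // Stopping every track releases the device and clears the browser's recording indicator
  const release = () => stream.getTracks().forEach((track) => track.stop());
  return { stream, release };
}
```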

Creating a MediaStreamAudioSourceNode

const audioInput = context.createMediaStreamSource(stream);

🔊 Passing the MediaStream creates a MediaStreamAudioSourceNode, a source node whose audio can then be played and manipulated within the graph.

Creating a ScriptProcessorNode (deprecated)

const bufferSize = 4096;

const recorder = context.createScriptProcessor(bufferSize, 1, 1); // mono in, mono out

recorder.onaudioprocess = function(event) {
  // Process the recorded samples; self is the Recorder instance in the full code above
  self.sendChannel(event.inputBuffer.getChannelData(0));
};

🔊 The ScriptProcessorNode interface is an audio-processing module linked to two buffers.

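🔊 One buffer contains the input audio data, and the other holds the processed output audio data.

🔊 An event implementing the AudioProcessingEvent interface is sent to the node each time the input buffer contains new data, and the event handler finishes once it has filled the output buffer with data.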
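ScriptProcessorNode is deprecated in favor of AudioWorklet. An AudioWorkletProcessor's `process()` receives audio in 128-frame blocks rather than the 4096-sample buffers requested above, so code that sends fixed-size chunks has to accumulate blocks. A sketch of that accumulation step; `ChunkAccumulator` is an illustrative class, and the processor outline in the comments (including the name `"capture-processor"`) is hypothetical:

```javascript
// Collects fixed-size chunks from smaller blocks (e.g. 128-frame AudioWorklet blocks),
// so a consumer still receives 4096-sample chunks as with ScriptProcessorNode(4096).
class ChunkAccumulator {
  constructor(chunkSize, onChunk) {
    this.chunkSize = chunkSize;
    this.onChunk = onChunk;
    this.buffer = new Float32Array(chunkSize);
    this.filled = 0; // samples currently held in this.buffer
  }

  push(block) {
    let offset = 0;
    while (offset < block.length) {
      const n = Math.min(block.length - offset, this.chunkSize - this.filled);
      this.buffer.set(block.subarray(offset, offset + n), this.filled);
      this.filled += n;
      offset += n;
      if (this.filled === this.chunkSize) {
        this.onChunk(this.buffer.slice()); // hand out a copy, then reuse the buffer
        this.filled = 0;
      }
    }
  }
}

// Outline of the worklet side (runs in AudioWorkletGlobalScope, not shown running here):
//   class CaptureProcessor extends AudioWorkletProcessor {
//     process(inputs) {
//       const channel = inputs[0][0];                       // input 0, channel 0
//       if (channel) this.port.postMessage(channel.slice());
//       return true;                                        // keep the processor alive
//     }
//   }
//   registerProcessor("capture-processor", CaptureProcessor);
// The main thread would then call accumulator.push(event.data) in node.port.onmessage.
```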

Connecting the audio graph

recorder.connect(context.destination);

audioInput.connect(recorder);
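The graph keeps the microphone open until it is torn down; the Recorder's `stop()` only stops sending audio. A sketch of releasing everything built in this section, assuming the four objects above are passed in together; `teardownRecording` is a hypothetical helper:

```javascript
// Undo the recording graph and release the microphone and audio hardware.
async function teardownRecording({ recorder, audioInput, context, stream }) {
  recorder.onaudioprocess = null;                      // stop receiving audio callbacks
  audioInput.disconnect();                             // mic source -x-> processor
  recorder.disconnect();                               // processor -x-> destination
  stream.getTracks().forEach((track) => track.stop()); // turn the microphone off
  if (context.state !== "closed") {
    await context.close();                             // free the audio hardware
  }
}
```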


![[ React ] 웹 소켓 통신 + Web Audio API](https://img1.daumcdn.net/thumb/R750x0/?scode=mtistory2&fname=https%3A%2F%2Fblog.kakaocdn.net%2Fdn%2FuB0Ek%2FbtrDPHnjZO6%2FhQFBfhm2vT5J3wUf1jbpK1%2Fimg.png)